Putting together the RuneScape Wiki

This post was originally written on Medium.

Last year, a group of awesome wiki editors and I forked the RuneScape and Old School RuneScape wikis from FANDOM/Wikia to an independent site. It was a monumental task that took a lot of time and effort, not least because of the sheer number of pages and revisions we have on the wikis (we had to import over 20 million revisions just for the RS Wiki!).

Here's how we did it.

Initial steps

When we first discussed forking the wikis back in late 2017, it was difficult to imagine how we were going to pull off such a move, though it was exciting to think about. The first hurdle was determining what kind of infrastructure we needed. The wikis use MediaWiki, an open-source wiki software developed in PHP. We couldn’t really diverge from this because we needed to maintain compatibility with the data from Wikia we would be porting, but it’s not like we actually wanted to either because MediaWiki is by far the most complete wiki software package available right now.

However, MediaWiki is quite old. It still gets very regular updates, but it relies on PHP, which can be slow, and there’s certainly some outdated code within the software that could do with some performance improvements. To compensate, we needed to make sure that we tuned the performance of MediaWiki, PHP, and other software we were using to ensure fast response times on the wikis and to decrease the chance of downtime.

To host the wikis, we needed servers that were fairly powerful and would be reliable. At first, we played around with the idea of using AWS EC2 instances, as it would’ve allowed us to scale up our infrastructure more easily, but the high costs associated with AWS made this a no-go at the time. We decided to settle on purchasing dedicated servers rather than a cloud-based solution. After setting them up with the basic software we’d need, such as a LAMP stack, we were ready to import the data over from the old Wikia sites.

It took several days to completely import Wikia’s data, mostly because some of the data was inconsistent or missing. We plugged the gaps by scraping data in a number of different ways, such as through the MediaWiki API on Wikia’s site. Wikia use a really old version of MediaWiki, so we had to put together our own tools to grab log entries and other data that are not included in their public dumps. Wikia had deleted some of our archived files, so in some cases we even had to use archive.org to find them.

One of the important technical goals was to make it as easy as possible for people to migrate their accounts over to the forked site, so that nobody lost the record of their existing contributions. Importing users ended up being a relatively easy task. We filled our user database with “dummy users” based on the usernames assigned to the revisions we had imported, and thanks to some scripts and an extension by the owner of another forked site, WikiDex, we were able to provide a method for those users to claim their old accounts. Users can simply log in with their Wikia credentials, and we authenticate them and move their account over.

The Great Merge

We had originally planned to launch the new wikis in January 2018, as a nice way to start the new year. Due to legal delays, our launch date had to be pushed back several times, which meant that we had diverged from the data that was on Wikia.

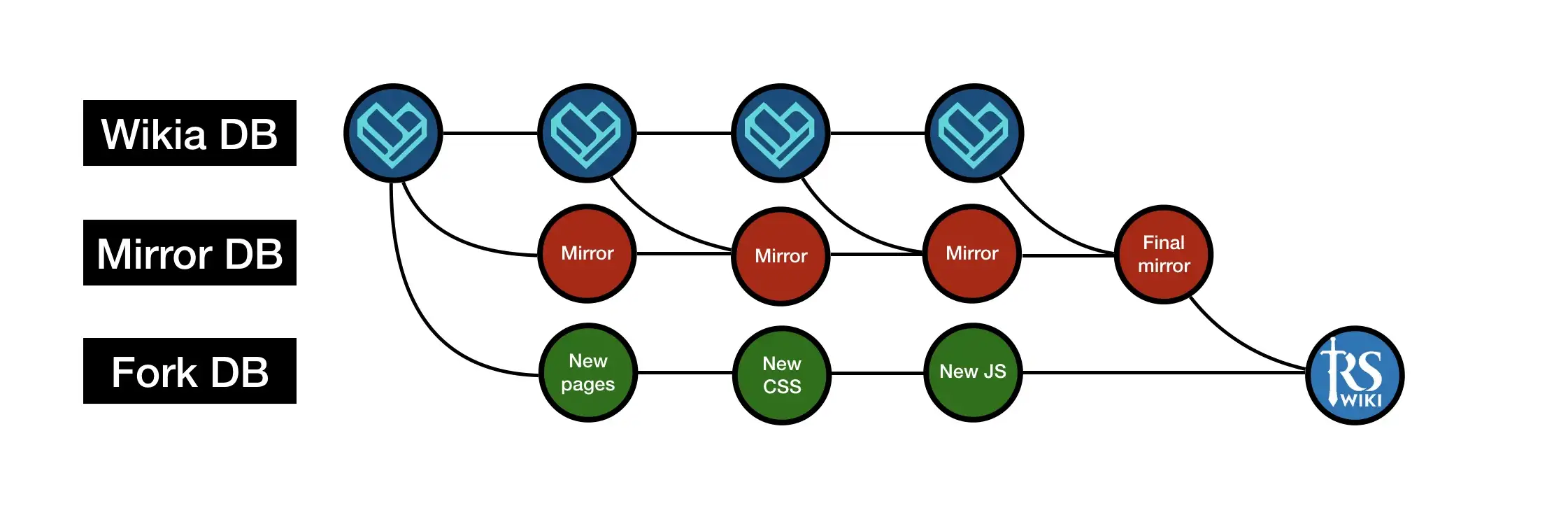

To make sure we were staying up to date with the content on the old wikis, we set up new databases that essentially acted as mirrors of the Wikia sites. The plan was simple: when we were ready to launch, we’d “merge” the new pages we’d made on the fork wikis with the last versions we pulled from Wikia in these new databases. To do this, we scraped Wikia’s recent changes feed and imported what we needed. This had to be done very frequently, because they do not keep a very long record of the recent changes on their wikis.
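As a rough illustration of what polling a recent changes feed can look like, here is a minimal Python sketch. It is not the tooling we actually used. The query parameters (`action=query`, `list=recentchanges`, `rcstart`, `rcdir`, `rclimit`) are standard MediaWiki API parameters, but `fetch` is a hypothetical helper that sends them to a wiki's `api.php` and returns the decoded JSON. The sketch also assumes the modern `continue` format; older MediaWiki releases return `query-continue` instead, which it does not handle.

```python
from typing import Callable, Iterator

# Standard MediaWiki API parameters for listing recent changes, oldest first.
BASE_PARAMS = {
    "action": "query",
    "list": "recentchanges",
    "rcprop": "title|ids|timestamp|user|comment",
    "rclimit": "500",
    "rcdir": "newer",
    "format": "json",
}


def iter_recent_changes(fetch: Callable[[dict], dict], start: str) -> Iterator[dict]:
    """Yield every change since `start`, following API continuation.

    `fetch` is a hypothetical helper: it takes the query parameters,
    calls api.php, and returns the decoded JSON response.
    """
    params = dict(BASE_PARAMS, rcstart=start)
    while True:
        response = fetch(params)
        yield from response["query"]["recentchanges"]
        if "continue" not in response:
            break
        # The API hands back the parameters needed to request the next batch.
        params = {**params, **response["continue"]}
```

Passing the timestamp of the last change already imported as `start` means each run only picks up what is new, which matters when the feed itself is only kept for a short time.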

After we had put together the CSS for the wikis, and we had official support from Jagex, we were ready to begin the process of preparing for our launch. A group of us met up in Cambridge, UK for a whole week and worked through several days to undertake final preparations, which included stress testing, ensuring our extensions worked, and checking that our last Wikia mirror was complete.

We were fortunate in that we weren’t really invested in Wikia’s ecosystem when we were using them as a host. Despite their best efforts, we never adopted their “portable infoboxes”, or enabled features like message walls and article comments. This meant that we didn’t have to worry much about maintaining compatibility when moving the wikis across, because the majority of features that we used on Wikia are available as open-source MediaWiki extensions.

On October 1st, the day before we launched, we began a process of merging our mirror site into our actual fork, where we had created new pages, CSS, and scripts exclusively for use on the new site. This was mostly a manual job, and was worked on by a number of wiki admins into the night.

The next afternoon, we went live and watched as our servers got bombarded with thousands of requests. Our launch didn’t go off completely as planned, because the high load resulting from an in-game broadcast in RuneScape took our servers down temporarily. We set up additional servers to prevent this downtime from happening again in the future.

How to reduce your database size by 95%

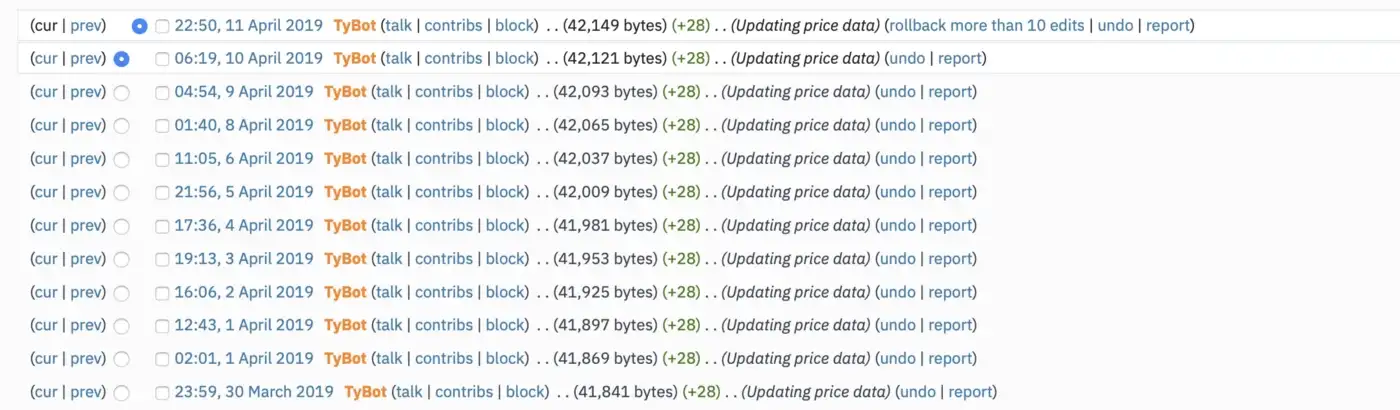

An important part of the wikis is our Grand Exchange data, as it is used to display prices on item pages and in other pages like our money making guides too. Every 24-48 hours, a bot updates every item’s data on the wiki with the timestamp and current price, creating a new revision record which contains the full content of the page, despite the bot only inserting one line per data page.

As you can guess, for pages with an extensive price history, this means we have a lot of old revisions full of this data, and the total grows faster and faster with every update, because each new revision stores the entire, ever-longer page all over again.

We decided to remove the majority of our older revision entries for the Grand Exchange data. It wasn’t really necessary to keep a historical record of all of these revisions in the way that we would for normal pages. By doing this we didn’t lose any of our historical Grand Exchange data, because our bot doesn’t replace the old timestamps and prices when it edits the pages; it just appends to them. We only ever need the latest revision of the data pages to show you all of our Grand Exchange data.
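To see why keeping only the latest revision saves so much, here is a small back-of-the-envelope sketch in Python. It assumes each bot update appends exactly one line and that every revision stores the full page text with no compression or diffing. The update count is made up for illustration and is not a figure from our database.

```python
def stored_lines(updates: int, keep_all_revisions: bool) -> int:
    """Count price lines held in revision storage for one data page.

    Assumes one appended line per update and full-text revisions.
    """
    if keep_all_revisions:
        # Revision k holds k lines, so the total is 1 + 2 + ... + updates.
        return updates * (updates + 1) // 2
    # The latest revision alone already contains every appended line.
    return updates


updates = 1000  # hypothetical number of price updates for one item
full = stored_lines(updates, keep_all_revisions=True)
latest = stored_lines(updates, keep_all_revisions=False)
print(f"all revisions: {full} lines, latest only: {latest} lines")
print(f"saving: {1 - latest / full:.1%}")
```

Under these assumptions the total stored across all revisions grows with the square of the number of updates, while the latest revision only grows linearly. The longer a page's price history, the larger the share of storage taken up by old revisions.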

It was a no-brainer to delete the older entries, and doing so resulted in a decrease in the size of our database by up to 95%! To prevent us needing to do this again, we’re currently developing a way to store and interact with this data in a more abstracted way, rather than through MediaWiki, which is something we couldn’t do when we were hosted on Wikia.

Self-inflicted wounds

One of the major issues we encountered soon after launching was that we were occasionally taking down our own website, most notably when we ran automated tasks to back up our data to AWS, due to really high CPU usage and memory allocation. Over the course of a few days, we lowered the amount of memory available to these backup scripts until we were happy that they would not cause more issues. This means that our backups take quite a while to finish (a full backup can take a whole day to complete), but it is a price worth paying to ensure that we do not cause issues for our servers.

Over time, we also developed issues with our Redis-based job queue. MediaWiki’s internal job queue is used to defer non-critical tasks until it is appropriate to run them. For us, these tasks included updating links between pages and templates, and also our semantic data. We use an extension called Semantic MediaWiki a lot throughout both wikis, including for powering the data shown on monster drop tables. If the job queue wasn’t running these tasks quickly enough, pages could show outdated or wrong data for a while.

At one point, we had 5.4 million jobs in our job queue just on the RuneScape Wiki, most of which were added as a result of people editing high-use templates on the wiki. It was clear that our job runner application wasn’t keeping up with demand, so we looked into making a number of changes to deal with this, which included purchasing another server which would be dedicated to running jobs.

Adding some flair

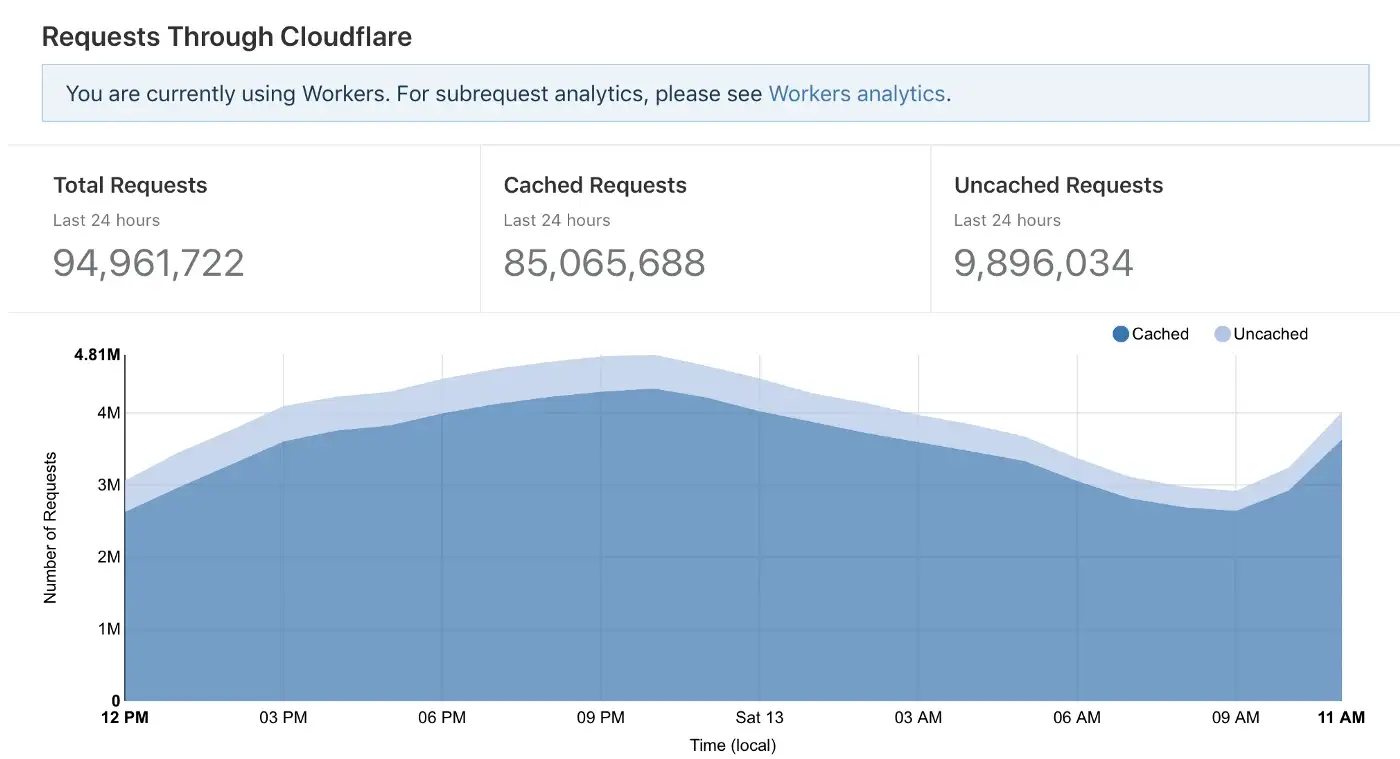

It was important for pages to load fast, not just because it helps improve our ranking on search engines like Google, but because it provides a better experience for people using the wiki. We also needed to ensure that the wikis could handle the millions of page views we get per day. We couldn’t really rely on serving our content from a tiny range of locations around the world, so we swiftly decided to utilise CloudFlare’s CDN.

Using CloudFlare with MediaWiki isn’t straightforward, because MediaWiki doesn’t really support it out of the box. We developed custom solutions that would purge CloudFlare’s CDN cache whenever a page or file was edited (or “touched”) on the wikis.
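For a sense of what a purge involves, here is a minimal Python sketch that builds purge-by-URL requests for CloudFlare's `purge_cache` endpoint. It is not our actual implementation. The `/w/` URL layout, the example domain, and the batch size of 30 are assumptions for illustration, and the sketch only prepares the requests rather than sending them.

```python
import json
from typing import Iterator
from urllib.parse import quote

# CloudFlare's cache purge endpoint; the zone ID identifies the site.
PURGE_ENDPOINT = "https://api.cloudflare.com/client/v4/zones/{zone_id}/purge_cache"
MAX_URLS_PER_REQUEST = 30  # assumed per-request limit; check your own plan


def page_url(base_url: str, title: str) -> str:
    """Build the public URL for a page title (hypothetical /w/ layout)."""
    return f"{base_url}/w/{quote(title.replace(' ', '_'))}"


def purge_requests(zone_id: str, urls: list[str]) -> Iterator[tuple[str, str]]:
    """Yield (endpoint, JSON body) pairs that purge `urls` in batches.

    Each pair would be sent as a POST with an
    "Authorization: Bearer <api token>" header.
    """
    endpoint = PURGE_ENDPOINT.format(zone_id=zone_id)
    for i in range(0, len(urls), MAX_URLS_PER_REQUEST):
        batch = urls[i:i + MAX_URLS_PER_REQUEST]
        yield endpoint, json.dumps({"files": batch})
```

Batching matters because editing a single high-use template can touch thousands of pages at once, so a hook that purges on every touch needs to group URLs rather than send one request per page.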

We spend a little bit extra for more page rules, and we also utilise another nifty feature of CloudFlare: the ability to load balance between origin servers. Doing this has almost certainly saved our bacon a number of times by preventing a single point of failure when one of our web servers experiences an unforeseen problem.

CloudFlare has proven itself to be extremely useful and has saved us a lot of money. I would highly recommend using it for your future projects, whatever their scale.

After the launch

Over the months since launching the wikis, we have worked a lot on improving user experience. We have made substantial optimisations and changes including:

- Caching some pages for longer using CloudFlare’s page rules and adding anonymous user caching using their Workers feature

- Purchasing additional domain names to provide a quick way to access the wikis (including rs.wiki, osrs.wiki, and oldschool.wiki)

- Migrating our SQL connections to ProxySQL to decrease load time and improve future scalability

- Implementing a dark mode for both wikis which relies on cookies

- Working with 3rd party clients for OSRS, including RuneLite and OSBuddy, to add new wiki-related features utilising custom-built APIs
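The anonymous user caching in the first item boils down to one decision at the edge: can this request be answered from a shared cache, or does it belong to a logged-in user who needs a personalised page? Here is a minimal sketch of that decision, written in Python for illustration rather than as real Workers code. The cookie-name suffixes are assumptions, not our actual configuration.

```python
from http.cookies import SimpleCookie

# Hypothetical cookie-name suffixes that indicate a logged-in session.
SESSION_COOKIE_SUFFIXES = ("_session", "UserID", "Token")


def is_cacheable(method: str, cookie_header: str) -> bool:
    """Return True when a request can be served from the shared edge cache."""
    if method not in ("GET", "HEAD"):
        # Edits, logins and other writes must always reach the origin.
        return False
    cookies = SimpleCookie()
    cookies.load(cookie_header)
    # Any session-style cookie means the page may be personalised.
    return not any(name.endswith(SESSION_COOKIE_SUFFIXES) for name in cookies)
```

For example, `is_cacheable("GET", "")` is true for a first-time visitor, while a request carrying a cookie such as `examplewiki_session=abc` would be passed through to the origin servers.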

We also welcomed the RuneScape Classic wiki and the Portuguese RuneScape Wiki to our wiki family, and we’d highly recommend checking them out.

What’s next?

In the future, we’re planning to add even more features to both wikis for RuneScape players and wiki editors to enjoy.

Interactive maps

At RuneFest 2018, we presented a demo of a work-in-progress interactive map for RuneScape. This is available to view online, and we’re excited to say that integrated interactive maps will start rolling out across the Old School RuneScape Wiki soon.

More page caching!

We’re always looking at ways to improve caching across our site to lower load times as much as we can, as we know that even a page taking an extra second or two to fully render can be frustrating.

Client integration

There’s now a link to the OSRS Wiki from the official Old School game client, but we’re not planning to stop there. We’re working with Jagex on exploring more ways to integrate the wiki into both RuneScape and Old School, and to make it easier for players to quickly access the information they need. We don’t have any ETAs for this though, so keep an eye out for updates in the future.

If you’re looking to fork a wiki and would like some advice, please feel free to contact us via this link. We’re more than happy to give guidance.

© 2023 Jayden Bailey. Built with Next, Tailwind, and Cloudflare.